Could You Survive Life As A Facebook Moderator?


Ever wonder why YouTube has such terrible comments?

It seems like the commenting community is made up of the worst trolls on the Internet. They’re so bad that YouTube has been called “a comment disaster on an unprecedented scale.”

But why YouTube? Lots of people watch videos online, and most of them aren’t jerks (we hope). There are plenty of other social networks and content-sharing platforms on the web with just as many users, and many with similar demographics.

The truth is, many of those other sites would be just like YouTube if it weren’t for professional content moderators.

While the Internet is a wonderful thing, the anonymity it provides can sometimes bring out the worst in people. The phenomenon even has a name: the “online disinhibition effect,” in which anonymity increases unethical behavior. Unlike in real life, the anonymity of the Internet shields people from the consequences of bad behavior, such as losing friends or gaining a bad reputation.

Many websites strive to provide the best possible environment for their users, so that people will keep coming back to the site. They want their visitors to feel safe, and to not be exposed to inappropriate content.

In order to do that, many of them use professional content moderators to sort through reported and flagged content and decide what’s inappropriate.

Social media moderation is a booming business. Many firms specialize in it, and they often outsource the work cheaply to workers in India, the Philippines, and other countries with a lower cost of living. There are content moderators in many countries, though, and in the United States recent college graduates make up the bulk of them.

How many moderators does it take to make Facebook feel safe?

Shockingly, one third of Facebook’s entire workforce.

You might think you have it bad when your Facebook feed is full of clickbait articles and Candy Crush invites. But professional content moderators have it much, much worse. It’s no wonder they often suffer from PTSD and last only a few months on the job, on average.

Wondering exactly what happens when you report something in your Facebook feed? Here’s how the people behind the machine take care of it.

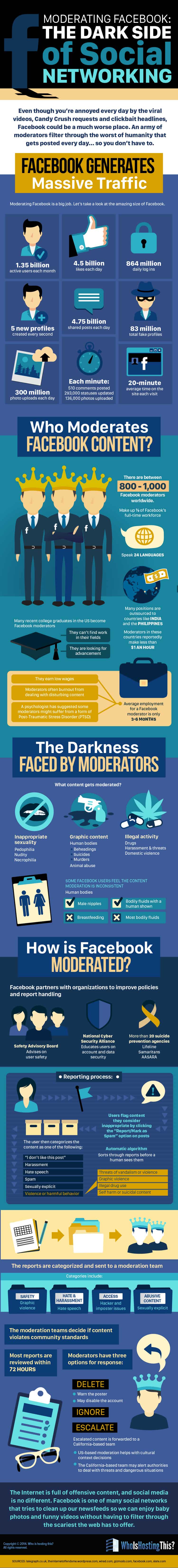

Moderating Facebook: The Dark Side of Social Networking

Even though you’re annoyed every day by the viral videos, Candy Crush requests and clickbait headlines, Facebook could be a much worse place. An army of moderators filters through the worst of humanity that gets posted every day… so you don’t have to.

Facebook Generates Massive Traffic

Moderating Facebook is a big job. Let’s take a look at the amazing size of Facebook.

- 1.35 billion active users each month

- 4.5 billion likes each day

- 864 million daily logins

- 5 new profiles created every second

- 83 million total fake profiles

- 300 million photo uploads each day

- 4.75 billion shared posts each day

- 20-minute average time on the site each visit

- Each minute:

  - 510 comments posted

  - 293,000 statuses updated

  - 136,000 photos uploaded

Who Moderates Facebook Content?

There are between 800 and 1,000 Facebook moderators worldwide.

- They make up 1/3 of Facebook’s full-time workforce

- They speak 24 languages

- Many positions are outsourced to countries like India and the Philippines

  - Moderators in these countries may reportedly make less than $1 an hour

- Many recent college graduates in the US become Facebook moderators

  - They can’t find work in their fields

  - They are looking for advancement

- Average employment for a Facebook moderator is only 3-6 months

  - They earn low wages

  - Moderators often burn out from dealing with disturbing content

  - A psychologist has suggested some moderators might suffer from a form of Post-Traumatic Stress Disorder (PTSD)

The Darkness Faced by Moderators

What content gets moderated?

- Inappropriate sexuality

  - Pedophilia

  - Nudity

  - Necrophilia

- Graphic content

  - Human bodies

  - Beheadings

  - Suicides

  - Murders

  - Animal abuse

- Illegal activity

  - Drugs

  - Harassment and threats

  - Domestic violence

Some Facebook users feel the content moderation is inconsistent:

- Okay – male nipples

- Not okay – breastfeeding

- Okay – most bodily fluids

- Not okay – bodily fluids with a human shown

How is Facebook Moderated?

Facebook partners with organizations to improve policies and report handling.

- Safety Advisory Board

  - Advises on user safety

- National Cyber Security Alliance

  - Educates users on account and data security

- More than 20 suicide prevention agencies

  - Lifeline

  - Samaritans

  - AASRA

Reporting process:

Users flag content they consider inappropriate by clicking the “Report/Mark as Spam” option on posts.

The user then categorizes the content as one of the following:

- “I don’t like this post”

- Harassment

- Hate speech

- Spam

- Sexually explicit

- Violence or harmful behavior

  - Threats of vandalism or violence

  - Graphic violence

  - Illegal drug use

  - Self-harm or suicidal content

Automatic algorithm

- Sorts through reports before a human sees them.

The reports are categorized and sent to a moderation team. Categories include:

- Safety

  - Graphic violence

- Hate and harassment

  - Hate speech

- Access

  - Hacker and imposter issues

- Abusive content

  - Sexually explicit

The moderation teams decide if content violates community standards.

Moderators have three options for response:

- Ignore

- Delete

  - Warn the poster

  - May disable the account

- Escalate

  - Escalated content is forwarded to a California-based team

  - US-based moderation helps with cultural context decisions

  - The California-based team may alert authorities to deal with threats and dangerous situations

Most reports are reviewed within 72 hours.

The Internet is full of offensive content, and social media is no different. Facebook is one of many social networks that try to clean up our newsfeeds so we can enjoy baby photos and funny videos without having to filter through the scariest content the web has to offer.

Sources

- The Top 20 Valuable Facebook Statistics – zephoria.com

- The Dark Side of Facebook – telegraph.co.uk

- The Real Story Behind Facebook Moderation and Your Petty Reports – theinternetoffendsme.wordpress.com

- The Laborers Who Keep Dick Pics and Beheadings Out of Your Facebook Feed – wired.com

- The Horrifying Job of Facebook Moderators – gizmodo.com

- What Happens After You Click “Report” – facebook.com

- An Army of Workers Overseas Suffer the Worst of the Internet So You Don’t Have To – slate.com

Thomas Lipscomb

July 26, 2015

Can you imagine a DUMBER idea than choosing recent “politically correct” college grads, all atwitter with “free speech zones” and “trigger warnings,” or foreigners with no concept of the First Amendment, as censors/moderators of a forum like FaceBook?

I can. Give them the authority to delete posters WITH NO APPEAL.

That is the system FaceBook has in place. I know from personal experience.

You’d have to be a recent Progressive graduate of Harvard to think it makes any sense at all.

For Zuckerberg, and your audience’s information: The entire US was turned into a “free speech zone” with the passage of the Bill of Rights.

If you have commercial reasons to want to moderate it, you’d better put in some checks and balances.

Thomas Lipscomb

July 26, 2015

Ironically, the “moderating/censorship” problem on sites like FaceBook and Reddit is amazingly easy to deal with.

Ensure posters take responsibility for their postings by allowing NO pseudonyms. Very few abusive posts come from real posters. The vast majority come from postings under pseudonyms.

Eliminate the camouflage for abusive posting, and the number of judgment calls for clearly underqualified recent college grad snowflakes and foreign coolie labor drops substantially.

Rather than showing sympathy for the stupid policies at FaceBook et al., “WHO IS HOSTING THIS” might do a service by showing alternative ways of better achieving the desired result.

Just because the Internet has been tolerating pseudonym posting, even at newspaper and press sites, for far too long, that doesn’t mean a policy of taking responsibility for postings by doing so under one’s own name with a proven identity isn’t far more in the spirit of the First Amendment. Rights come with responsibilities. We don’t need some “authority” to act to ensure we exercise “proper discretion.” That is just piling on another abuse against freedom of speech. Let the abusive posters catch hell from their peers.

And the fact there is NO APPEAL from the decision of these woefully underqualified “moderator/censors” makes a change in policy imperative.

Kimian111

August 24, 2016

I would just like to challenge the sentiment of those who think that moderation is done by underqualified moderators. These moderators don’t use their personal opinions to filter content. They go by a specific guideline. But even so, I would still like to challenge anyone who isn’t a sick, cruel, heartless human to continue to assassinate the characters of those who put themselves at risk to do the moderating. I highly doubt that you could do that job. Do you understand how sickening and diabolical so many people are who brag about it and videotape it and proudly boast it on social media for the purpose of challenging sicker people to top it? You spend 1 day in the moderators’ shoes and I guarantee your life will be permanently damaged by the content you see, then review your smack talk and stand up for the 1st Amendment rights (which I’m pretty sure you have enjoyed exercising without any abusive repercussions your entire life) like that is the concern here. When you are forced to see what the sicko breed of humans are doing daily and dreaming about it when they are not doing it, you will bite your tongue and wish you never even spoke up. That is the sign of the other human breed, the comfortable, entitled, ignorant ones who live within padded, shielded walls and think every problem can only get as bad as they have witnessed. You have NO idea what you are protected from knowing about, seeing or experiencing. None.

This is life-changing, and it will change your soap-boxing, complaining, invalidating, condescending mouth forever. Trust me, or walk right in there and prove me right. I am not being nasty, I am just telling you in no uncertain terms that what you said indicated that you are totally unaware and I’m afraid you will influence other ignorant people to feel the same. It’s not a joke, it’s sick. Sicker than you can even think, and it happens continually with no stop in sight. These moderators may never heal from what they realize. Next time, you might be crying out to save our children from this kind of exposure right at the start of their bright futures. Who do you think is qualified to moderate your stuff for you to see or not see? If you were a moderator, how would you be any different with blocking things that personally offend YOU and allowing things that don’t bother YOU? Come on, maybe you were having a rough day; nonetheless, I stress that NO ONE should have to see the sickness of disgusting low-life humans and what they do for kicks. There is no possible argument about it. If you feel you need to, I suspect you have not been subjected to the material in question, or else you have been, and you are one of the ones who enjoy torturing people and animals and showing every excruciating moment of pain for entertainment.

Mary Ann Redfern

August 30, 2016

Don’t tell ME that FB moderators never moderate from a position of personal beliefs. I have experienced such abuse from FB moderators too many times not to recognize that they sometimes moderate from their own personal emotions. You will NEVER convince me otherwise at this point. Don’t even try.